Leading the AI Revolution

Generative AI is taking the world by storm. Tools like ChatGPT have shown us the power of this technology. And while GenAI is a game changer, the real revolution won’t happen until businesses and families all over the world use it. This is where data infrastructure becomes key to enabling that change. At Xebia, we have been setting up data platforms and pipelines for twenty years, and we can help you bring the GenAI revolution to your company.

How Generative AI Adds Value to Your Business

Generative AI (GenAI) can help businesses increase efficiency, drive innovation, personalize products and services, and gain a competitive advantage. By automating repetitive tasks and providing personalized solutions, generative AI can save businesses time and money while improving customer satisfaction and loyalty. This way, companies can offer unique and innovative products that keep them ahead of the competition.

You might have heard that GenAI will take your job, but GenAI tools are simply another way to improve your business if you know how to use them properly, for example, to assist with programming.

The Challenges of Running Generative AI in Production

GenAI models such as large language models (LLMs) can help with drafting anything from customer replies to summaries of company financial updates. However, these models aren’t easy to work with. It's difficult for engineers to tune model performance and to generate stable, reproducible responses. And even if you get an LLM to work exactly as desired, its complexity makes it challenging to deploy and tame in production systems, or to gather the computational resources needed to run it. Furthermore, deploying GenAI models in production environments raises questions about data privacy and security, such as how to protect the company’s internal data from leaks. So, how can we solve all these issues?

Our Proposed Solution

To bring the power of Generative AI to your applications and address the challenges above, we designed the Gen AI Platform blueprint. This MLOps (Machine Learning Operations) platform, one of the solutions in our Xebia Base, is tailored to help you build Gen AI-powered applications, maintain the complete lifecycle of your models, and integrate them with your existing infrastructure. The platform consists of the following components:


Model

Depending on your business needs, you may leverage open-source (e.g., Falcon) or commercially available foundation models (e.g., OpenAI Chat API, Google PaLM API, Amazon Bedrock, and more). We can help you integrate these out-of-the-box solutions or automate fine-tuning on your own data for more personalized applications.

Prompts

The key to using Generative AI efficiently is finding the right prompts. We provide you with an environment to manage, document, and experiment with prompt templates.
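To illustrate what managing and versioning prompt templates can involve, here is a minimal sketch using only the Python standard library. The `PromptRegistry` class and its methods are hypothetical and not part of any Xebia product. A real platform would persist templates and their documentation rather than keep them in memory.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from string import Template


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store of versioned prompt templates."""

    # Template name -> list of versions; version N is stored at index N - 1.
    templates: dict[str, list[str]] = field(default_factory=dict)

    def register(self, name: str, template: str) -> int:
        """Store a new version of a template and return its version number."""
        versions = self.templates.setdefault(name, [])
        versions.append(template)
        return len(versions)

    def render(self, name: str, version: int | None = None, **values: str) -> str:
        """Fill in a template's $placeholders; uses the latest version by default."""
        versions = self.templates[name]
        if version is None:
            version = len(versions)
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name!r} has no version {version}")
        # substitute() raises KeyError if a placeholder has no value,
        # so incomplete prompts fail loudly instead of reaching the model.
        return Template(versions[version - 1]).substitute(**values)
```

Keeping every earlier version instead of overwriting it is what lets teams compare prompt experiments side by side and roll back a change that performed worse.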

Monitoring

We record prompts, responses, and user feedback to monitor performance and detect model drift. We then use this information to fine-tune the model.
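To make this feedback loop more concrete, the sketch below logs each prompt, response, and optional user rating. It uses a deliberately simple heuristic in place of a full drift detector: drift is suspected when the average rating over the most recent interactions falls below a baseline by more than a tolerance. The class names, the 1 to 5 rating scale, and the default thresholds are illustrative assumptions. Production monitoring would typically rely on richer statistics.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Interaction:
    prompt: str
    response: str
    rating: int | None = None  # hypothetical user feedback on a 1-5 scale


@dataclass
class InteractionLog:
    baseline: float         # assumed average rating observed at deployment time
    window: int = 100       # number of most recent rated interactions to compare
    tolerance: float = 0.5  # allowed drop below baseline before flagging
    records: list[Interaction] = field(default_factory=list)

    def record(self, prompt: str, response: str, rating: int | None = None) -> None:
        """Store one prompt/response pair together with optional feedback."""
        self.records.append(Interaction(prompt, response, rating))

    def drift_suspected(self) -> bool:
        """Simple heuristic: recent average rating has dropped below baseline."""
        rated = [r.rating for r in self.records if r.rating is not None]
        recent = rated[-self.window:]
        if not recent:
            return False
        return mean(recent) < self.baseline - self.tolerance

    def fine_tuning_examples(self, min_rating: int = 4) -> list[tuple[str, str]]:
        """Highly rated prompt/response pairs as candidate fine-tuning data."""
        return [
            (r.prompt, r.response)
            for r in self.records
            if r.rating is not None and r.rating >= min_rating
        ]
```

The same log serves both purposes described above. It raises a monitoring signal, and it supplies well-rated examples that could feed a later fine-tuning run.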

Interface

We provide a programmatic API for Gen AI model inference, packaged as a Docker container that is ready to be deployed as a service. This gives you control over the inference process and helps you optimize for speed.
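As a rough illustration of such a programmatic inference API, here is a minimal sketch built on Python's standard `http.server` module. The `/generate` route, the JSON request and response shapes, and the `generate` placeholder are all assumptions. The placeholder only echoes the prompt instead of calling a real model. Inside a Docker container, a script like this would be the process the image starts.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str) -> str:
    """Hypothetical placeholder for a call to the deployed Gen AI model."""
    return f"Echo: {prompt}"


class InferenceHandler(BaseHTTPRequestHandler):
    """Serves POST /generate with a JSON body like {"prompt": "..."}."""

    def do_POST(self) -> None:
        if self.path != "/generate":
            self.send_error(404, "Unknown route")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            prompt = payload["prompt"]
        except (json.JSONDecodeError, KeyError, TypeError):
            self.send_error(400, "Expected a JSON body with a 'prompt' field")
            return
        body = json.dumps({"completion": generate(prompt)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Start the service; binding to 0.0.0.0 lets Docker publish the port."""
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()
```

Calling `serve()` starts the service. A client could then POST `{"prompt": "..."}` to `http://localhost:8080/generate` and receive a JSON object with a `completion` field. Swapping the body of `generate` for a real model call leaves the API contract unchanged.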

Cloud and Data Foundation

We supply the components you need to maintain a Gen AI model through its entire lifecycle on your own infrastructure, from model and prompt storage to fine-tuning automation, inference, and model monitoring.

The Benefits for Your Company

- Model standardization: MLOps frameworks make it easier to train, deploy, and update large GenAI models across different environments, reducing operational complexity and improving efficiency.
- Version control and collaboration: A GenAI platform lets organizations update their models, data, and prompts transparently, enabling collaboration within teams, experiment tracking, and the ability to roll back changes.
- Model governance and reproducibility: By monitoring model training, deployment, and updates, and by providing lineage across the whole lifecycle, teams can ensure that LLMs are built on well-defined processes and best practices, leading to more reliable and predictable results.
- Improved scalability and performance: Managing the entire lifecycle of an LLM with a GenAI platform allows organizations to optimize resource allocation for AI workloads, minimizing latency and maximizing throughput in real-time systems. This also includes optimizing inference speed and cost for self-hosted models.
- Control of the fine-tuning and model inference process: You can ensure privacy and information security during fine-tuning and model inference.
- Cost optimization: You can control and optimize the infrastructure cost of running your Generative AI-based applications.

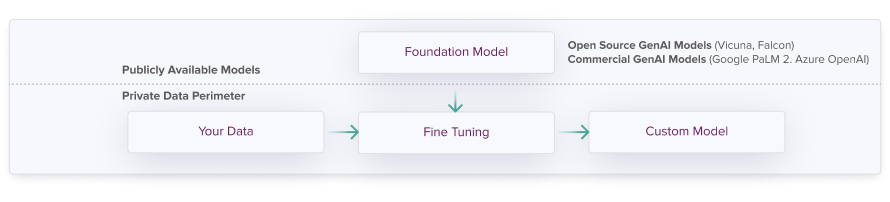

Tuning Whilst Keeping Your Data Private

In the Base Generative AI Platform, we leverage transfer learning, using both commercial foundation Generative AI products from third-party vendors and open-source models. We combine them with your data to adapt the platform to your needs. The components are designed so that your data stays within a private data perimeter.